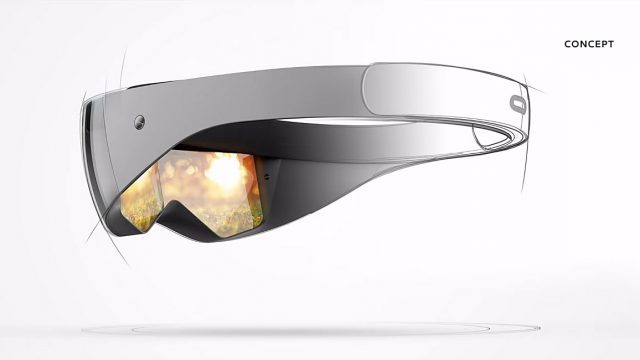

Varjo is a relatively new name in the VR scene, but the company is certainly generating buzz, having quickly raised some $15 million in venture capital on the promise of delivering a VR headset that achieves retina resolution at the center of the field of view. But how exactly does it work? A new graphic shows the key tech behind the company’s headset.

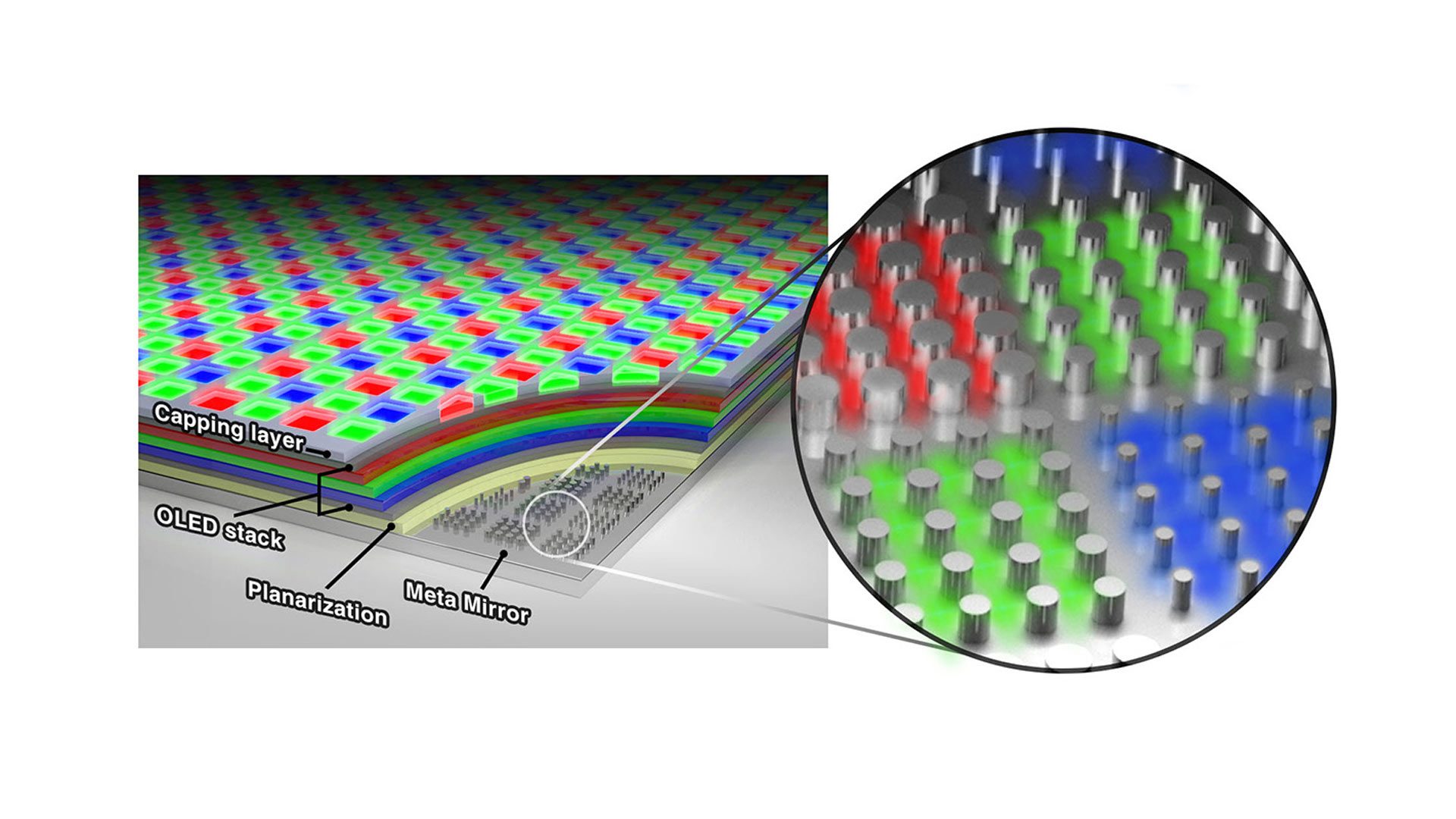

Today there are primarily two choices when it comes to the kind of display to use in a VR headset. The first is a traditional display like the kind you’d find in your smartphone. The problem with traditional displays today is that the pixels aren’t yet small enough to be truly invisible. Then there are microdisplays, which have incredible pixel density, but the displays themselves can’t yet be made large enough to provide a very wide field of view in a VR headset.

So until either traditional displays can shrink their pixels drastically, or microdisplays can be easily made much larger, we’re still quite a way from achieving ‘retina resolution’—having such tiny pixels that they’re invisible to the naked eye—in highly immersive VR headsets.
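To put rough numbers on that gap, here is a back-of-the-envelope sketch. It assumes the commonly cited threshold of about 60 pixels per degree (one pixel per arcminute, roughly 20/20 acuity) as the bar for ‘retina resolution’, and it treats pixel density as uniform across the field of view, which real headset lenses do not quite deliver. The panel width and field of view below are hypothetical, not figures from Varjo.

```python
import math

# Assumption: ~60 pixels per degree (1 arcminute per pixel) as the
# "retina resolution" threshold. This figure is not from Varjo.
RETINA_PPD = 60.0


def pixels_per_degree(pixels_across: int, fov_degrees: float) -> float:
    """Average angular pixel density, ignoring lens distortion."""
    return pixels_across / fov_degrees


def pixels_needed(fov_degrees: float, target_ppd: float = RETINA_PPD) -> int:
    """Pixels required across the field of view to hit the target density."""
    return math.ceil(fov_degrees * target_ppd)


# Hypothetical headset: 1,200 pixels per eye spread across 100 degrees.
current = pixels_per_degree(1200, 100.0)   # 12.0 pixels per degree
required = pixels_needed(100.0)            # 6000 pixels across
```

Under those assumptions, a traditional panel would need about five times as many pixels along each axis to reach the threshold across the whole view, which is why covering only the center of the gaze is attractive.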

But Varjo hopes to deliver a stopgap which combines the advantages of traditional displays (wide field of view) with those of microdisplays (high pixel density), to deliver a VR headset with retina resolution (at least in a small portion of the overall field of view).

Combining Displays

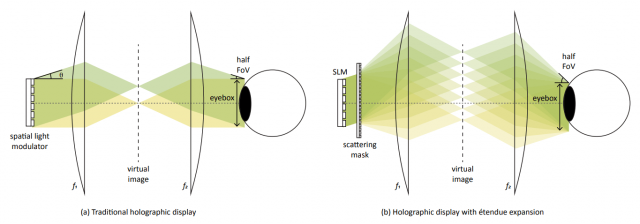

The underlying concept is illuminated in a recent animated graphic from the company’s site:

Above you can see (right to left) a diagram of the viewer’s eye, a traditional lens, a moving refraction optic (above), a microdisplay (below), and a traditional display.

As you can see, the refraction optic can move the reflected microdisplay image onto the corresponding section of the traditional display. The idea is that the reflected high-resolution image will always be positioned at the very center of the user’s gaze, with the help of precision eye tracking, while the lower resolution traditional display will fill out the peripheral view where your eye can’t see nearly as much detail. This is very similar to software foveated rendering, except in this case it’s almost like moving the pixels themselves to where they are needed, instead of just rendering in higher quality in a specific area.
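The bookkeeping behind “positioned at the very center of the user’s gaze” can be sketched in a few lines. This is only an illustration, not Varjo’s method: it assumes the eye tracker reports a normalized gaze point (0 to 1 on each axis), and the names `Rect` and `focus_region` are hypothetical. It returns the rectangle on the traditional display that the high-resolution image should cover, clamped so it never runs off the edge of the panel.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    x: int  # left edge, in traditional-display pixels
    y: int  # top edge, in traditional-display pixels
    w: int
    h: int


def focus_region(gaze_x: float, gaze_y: float,
                 ctx_w: int, ctx_h: int,
                 focus_w: int, focus_h: int) -> Rect:
    """Rectangle covered by the high-res image, centered on the gaze point.

    gaze_x and gaze_y are assumed to be normalized to the range 0..1.
    focus_w and focus_h are the apparent size of the microdisplay image,
    measured in traditional-display pixels.
    """
    # Center the region on the gaze point.
    x = round(gaze_x * ctx_w - focus_w / 2)
    y = round(gaze_y * ctx_h - focus_h / 2)
    # Clamp so the region stays fully on the traditional display.
    x = max(0, min(x, ctx_w - focus_w))
    y = max(0, min(y, ctx_h - focus_h))
    return Rect(x, y, focus_w, focus_h)


# Looking straight ahead on a hypothetical 1000x1000 panel:
center = focus_region(0.5, 0.5, 1000, 1000, 200, 200)
# Looking at the bottom-left corner; the region is clamped to the panel:
corner = focus_region(0.0, 1.0, 1000, 1000, 200, 200)
```

In software foveated rendering, a rectangle like this would only tell the renderer where to spend extra effort. In Varjo’s approach it would instead be a target for the moving optic.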

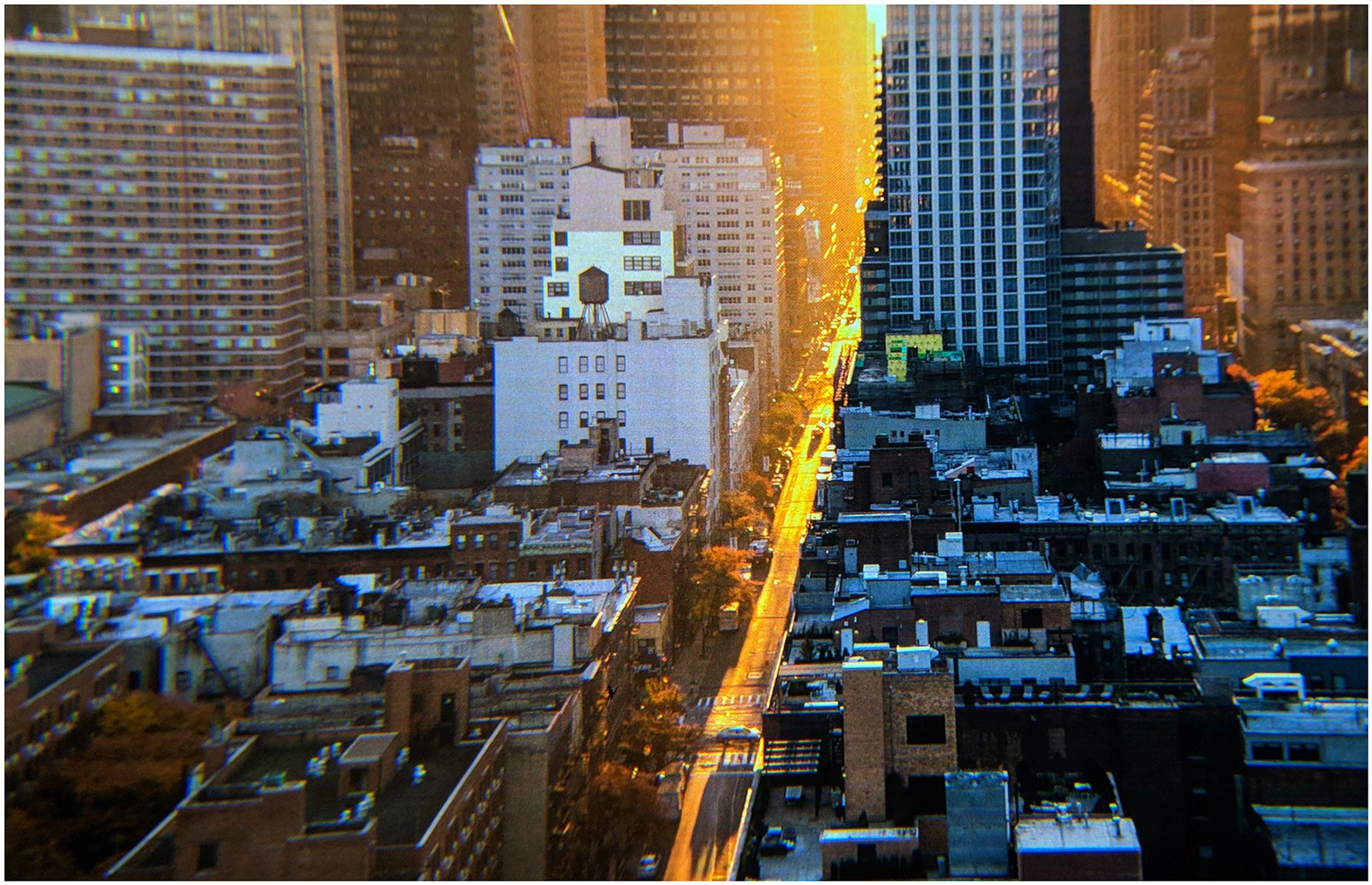

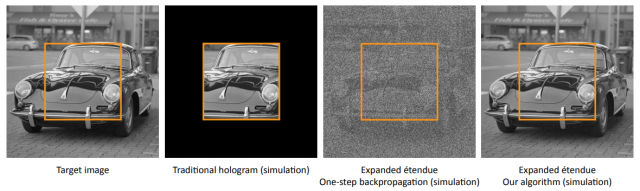

The example image above, from Varjo, shows the difference in visual quality between the image from the traditional display and the image from the microdisplay.

Moving the High-res Region

The big questions of course: how do you move the refraction optic quickly enough to keep up with the movements of the eye, reliably, and in a space compact enough for a reasonably sized VR headset?

As for how the optic would be moved, the answer may lie somewhere in the company’s key patent, Display Apparatus and Method of Displaying Using Focus and Context Displays, which describes “actuators” that could be involved in the various moving parts. Additional hints about how the company hopes to achieve this can likely be found in Varjo’s job listing for a “Miniature Mechatronics Expert”:

You will be responsible of designing the actuators and motor controls for our mixed reality device. […] You will participate the development of leading edge motor technologies and design novel actuator mechanics to harness the power of custom designed optics, motors and electronics to reach new fronts in miniature mechatronics.

[…]

Responsibilities

- Create motor position control algorithms

- Design position encoder system

- Set performance targets and requirements for motor units

- […]

Plans ‘A’ Through ‘H’

The patent, which refers to the traditional display as the “context display” and the microdisplay as the “focus display,” actually covers a wide range of possible incarnations of the tech using varying methods for combining the microdisplay image with the traditional display image, including the use of waveguides, additional prisms, and other display technology entirely, like projection.

Correcting Artifacts

Another big question: what artifacts will the optical combination process introduce to both the reflected microdisplay image and the image from the traditional display?

The patent also touches on that (a clear indicator that artifacts will indeed need to be contended with); it suggests a number of techniques which could help to eliminate distortions, including masking the region of the traditional display that’s directly behind the reflected display, dimming the seams of the two display regions to try to create a more even transition from one to the other, and even intelligently fitting the transition seams to portions of the rendered scene, connecting them like puzzle pieces in an effort to make the seams less pronounced.
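The first two of those techniques, masking and seam dimming, can be pictured as a per-pixel brightness multiplier applied to the traditional display. The sketch below is one possible reading, not the patent’s actual method: the function name `context_weight`, the linear ramp, and the `feather` width are all assumptions made for illustration.

```python
def context_weight(px: int, py: int,
                   rect: tuple[int, int, int, int],
                   feather: int) -> float:
    """Brightness multiplier for one traditional-display pixel.

    rect is (x, y, w, h) of the region covered by the high-res image.
    Returns 0.0 inside that region (fully masked), then ramps linearly
    up to 1.0 over `feather` pixels outside it (the dimmed seam).
    `feather` must be greater than zero.
    """
    x, y, w, h = rect
    # How far the pixel lies outside the region on each axis (0 if inside).
    dx = max(x - px, 0, px - (x + w - 1))
    dy = max(y - py, 0, py - (y + h - 1))
    # Use the larger of the two so the ramp follows the rectangle's outline.
    dist = max(dx, dy)
    return min(dist / feather, 1.0)


region = (400, 400, 200, 200)                     # hypothetical region
inside = context_weight(500, 500, region, 20)     # 0.0, masked
seam = context_weight(609, 500, region, 20)       # 0.5, halfway up the ramp
outside = context_weight(0, 0, region, 20)        # 1.0, untouched
```

A complementary fade on the edges of the high-resolution image would presumably be needed to keep overall brightness even across the seam. The third technique, fitting seams to the content of the rendered scene, would require analyzing the image itself and is not attempted here.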

– – — – –

Varjo has laid out a number of interesting methods for pulling off their “bionic display.” Of course, the devil is always in the details—we’ll be looking forward to our first chance to try their latest prototype and see what it really looks like in practice.

The post The Key Technology Behind Varjo’s High-res ‘Bionic Display’ Headset appeared first on Road to VR.